AI as Translator: How art generated by Artificial Intelligence can decode non-human messages.

We live in a time when Artificial Intelligence (AI) can easily write a poem or make a painting. What if I told you there was a machine that could learn the language of other animals and let you communicate with them, just like in a Disney movie?

Helena Nikonole, a young artist and independent curator based in Istanbul, works in the field of new media and artificial intelligence. Her work focuses on utopian scenarios of a post-human future and on the dystopian present. On September 17, she was invited to present her project Bird Language at Leiden University.

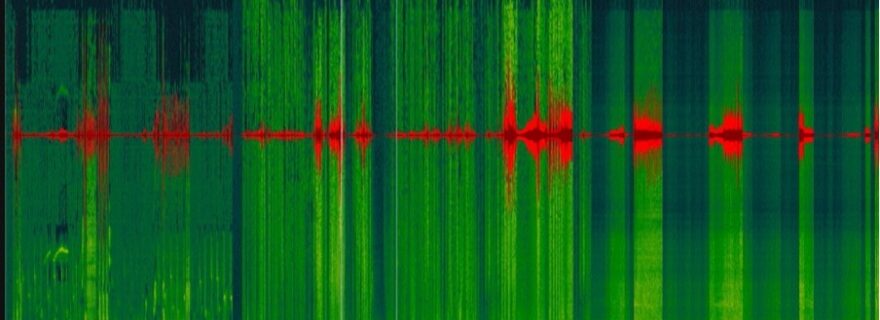

Bird Language is a project that explores the possibility of talking with birds through Artificial Intelligence, within the context of biosemiotics (the study of how living organisms produce and interpret signs). The premise of Nikonole's project is that bird language has a grammar and patterns that a machine can analyze statistically. In the first stage of the project, Nikonole's team, working with scientists, ornithologists and AI experts, trained a neural network on the songs of nightingales to create communication between birds and AI.

The second stage of the project consisted of creating an AI translator from bird language into human language. In this project, the AI seems to understand the birds' language before any biologist does. The AI is therefore not merely a mediator or interface between humans and birds but something closer to an organ: it acts as a direct communicator with the birds. To learn more about the project, watch the video Bird Language on YouTube.

The human fascination with animal vocalizations goes back a long way. We share our planet with more than 8 million species, yet the only language we understand is our own. Birds have a rich repertoire of calls, and the social platform Twitter even borrowed the metaphor of birdsong to describe its short posts. However, most experts do not call bird calls a language, since no form of animal communication meets the linguistic definition of language. Even so, it is widely believed that birds make different sounds to convey different messages.

Nikonole's project suggests that AI may one day help us keep various species safe and healthy, since it may be better than humans at "understanding" the sounds of the natural world. Although we humans cannot know how animals experience the world, AI may help us find out how other animals experience emotions similar to ours and how they communicate those emotions to other members of their species. We should keep in mind that many animal species have sophisticated societies, yet their calls cannot simply be equated with human languages. As in linguistics, meaning can be established only by studying these calls within a specific context.

After the presentation, with the help of Professor Ksenia Fedorova, Nikonole also organized a workshop on the theme 'AI & Art: Aesthetics and Politics of Artificial Neural Networks', as well as an exhibition at the EST Art Foundation. In the workshop, the artist demonstrated how AI algorithms work and how we as individuals can use AI as a tool to generate artworks based on our own ideas. Following her instructions, every participant successfully generated images by entering various keywords.

The hands-on machine-learning experience impressed the participants, and at the end of the workshop there was a lively discussion about the rationale for, and potential of, applying AI in other art practices. Afterwards, everyone went to the exhibition at the EST Art Foundation, where Nikonole and Fedorova again talked with visitors about the inspiration behind Nikonole's project and the controversies surrounding AI-generated art. During the exhibition, visitors not only wandered around the white gallery space but also put on headphones to experience the conversation between the AI and the birds for themselves.

The new artistic approaches that AI brings, together with the critical approach to technology that accompanies them, will remain part of the history of AI. As Nikonole's project shows, AI can distinguish a random change from a semantically meaningful one, and this brings us closer to meaningful communication. The AI speaks the language and makes the image, even though we humans do not yet know what it means.

Xi Yang is a master's student of Art History at Leiden University, following the 'Arts & Culture' track. She currently also works as a freelance designer and does eco-marketing at Ocean Health. Her work focuses mainly on digital media and subculture, and her research interests lie in the relationship between art and technology.
